转载自:https://katyscode.wordpress.com/2021/01/24/reverse-engineering-adventures-brute-force-function-search-or-how-to-crack-genshin-impact-with-powershell/

————————————————————————————

Today, I thought we’d have a bit of fun and show you a novel and unorthodox alternative way to find any function with known discrete inputs and an assumption about the possible outputs in a compiled application – specifically for this example, a decryption function in a game. We’re going to crack it with PowerShell.

Well, sort of… far be it from me to troll my dear readers with a clickbait title, but there is an element of truth in this. At the very least, to perform this attack your life will definitely be easier if you have some kind of scripting language on hand.

The technique I’m about to describe can be applied to any application which you know contains a specific function with particular arguments and return type, but don’t know where it is located in the binary file.

Problem description

You want to find a function in an executable image. You know the function arguments and return type, but the assembly code is extremely obfuscated and difficult to disassemble, trace or decompile. What can we do?

What if… we just call every address in the image until one of them spits out the expected result?

This can be considered a kind of brute-force attack where the key space is the possible address range of code in the image. It might also be thought of as a kind of reverse fuzzing, where we deliberately pass invalid arguments to every function in an application except for one – where the arguments have been pre-chosen to be valid – to distinguish it from the rest.

Your first thought might be to simply write a piece of code which loads the image and calls every address in a for loop with the desired arguments, then checks the result. Unfortunately, this won’t work. Most of the time you will be calling into the middle of a function, or in the case of architectures such as x86 which uses variable instruction length, you may also be calling into the middle of an instruction that may end up being an invalid opcode.

Many of the calls you make to valid instructions will lead to stack corruption, as the end of the function will pop values off the stack that were never pushed on at the start, since those instructions were skipped. The majority of the rest of the calls will result in the function using invalid or uninitialized register or memory data, which will lead to undefined behaviour and quite probably crash the calling process.

The way we can deal with this is by using two processes: one which calls a specified address in the image – the “client process”, and a second process which contains a for loop that repeatedly invokes the first process for each address – the “host process”. If the client process crashes, the host process simply moves to the next iteration of the loop and re-invokes it.

About today’s case study

(you can skip this section if you don’t care about Genshin Impact; the details are not relevant to the main exercise in the article)

miHoYo’s open world action RPG Genshin Impact – or Gacha Impact as I like to call it – has seen a surge of popularity since it was launched in September 2020. It uses more or less the same protection from automatic reverse engineering by IL2CPP tools like Il2CppInspector as Honkai Impact, but with a substantially higher level of security:

- process memory can no longer be dumped by tools like ProcDump (in Genshin Impact 1.1)

- assembly instruction-level obfuscation is used to prevent the code from being decompiled in both the main

exeandUnityPlayer.dll UnityPlayer.dllnow has anti-debugging countermeasures

They’re learning! There are also a couple of minor kinks:

- some additional initialization is required prior to the metadata decryption step. Without this, one extra XOR step will be required after decrypting

global-metadata.datto fully recover the plaintext. The initialization code is heavily obfuscated but easily mitigated by determining the required XOR transformation from the partially decrypted file (see end of article for more details) - the fake decoy API

il2cpp_thread_get_namein the main game DLL which receives pointers to the decryption functions fromUnityPlayer.dllwas renamed toil2cpp_init_security(another decoy API name) and the “authentication check” was removed

I have produced an extensive four-part series on how to reverse engineer Honkai Impact for IL2CPP tooling, so refer to those articles for more details – virtually all of it is equally applicable to Genshin Impact and you can use exactly the same techniques to reverse engineer this game.

I’ll be working with Genshin Impact 1.1 today but the technique should be equally applicable to any version. Full disclosure, I did not reverse engineer it in the traditional way first, so there was no cheating involved!

The function

In this example, we shall look for a function which has the following signature:

uint8_t *Decrypt(uint8_t *encryptedData, uint32_t length);

The function receives a blob of encrypted data and the length of the data as its input arguments, and returns a pointer to a decrypted blob of the same length. We have the encrypted data stored in a file (which in this case is called global-metadata.dat) and therefore also know its length. We also make assumptions about the content of the decrypted data. We have to know something – anything – about the output so that we can validate any results we get to check if we have found the correct function.

In this case, the input file contains repeating blocks of 0x40 bytes that are encrypted, surrounded by unencrypted areas. For example:

002408C0 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

002408D0 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

002408E0 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

002408F0 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

00240900 1E 15 01 FB 76 FE F7 35 86 B4 B2 58 A1 58 46 04

00240910 30 37 2D 6A B0 65 87 77 A2 AA 8C D6 CD 33 EE 1F

00240920 99 EB F9 B9 8E 2E 7B 98 52 77 62 FA D2 8B 73 C3

00240930 75 33 91 28 42 4D 4E 2E 49 23 C6 91 58 AE F6 F8

00240940 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

00240950 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

00240960 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

00240970 8F 5F 00 00 04 00 00 00 95 5F 00 00 05 00 00 00

In this portion of the file, part of a table is encrypted. We can reasonably assume that the data at offsets like 0x240908 is 95 5F 00 00 05 00 00 00, or at the very least that the last byte of this sequence is zero if nothing else.

It doesn’t matter if you don’t have access to this insight: if the entire file is encrypted, but you know the decrypted result contains some string, some sequence of bytes, starts or ends with a particular byte etc., you can just search the output to see if it matches your criteria after each function call. This is called a known plaintext attack.

For this particular example, we don’t know for sure the function exists, but we are assuming it does based on the fact that previous, less obfuscated versions of the game have a function with the same behaviour, derived from static analysis of those versions. The encryption algorithm may be different in the image being analyzed, but that doesn’t matter as long as the function signature is the same: the goal is to decrypt the data, not to discern the algorithm itself.

WARNING: The following exercise deliberately calls unknown code. Always use a VM or other sandbox for this kind of work and never run the code as an administrator. An unscrupulous developer can easily throw in a function that wipes your hard drive, and other corruption could occur by accident as a result of invalid or uninitialized data.

Watch out, it’s the fuzz!

We begin the proceedings by creating a small C# project which will load the image, call the function at the virtual address specified as a command-line argument and check the result.

Immediately we hit a stumbling block: certain kinds of unmanaged exceptions such as stack overflows and access violations (attempts to access memory outside the bounds of the process, or to write or execute read-only memory) are converted to corrupted state exceptions (CSEs) in .NET. By default, these are not handled and the application will crash.

.NET Framework 3.5 and onwards provide a mechanism to handle this scenario by adding the HandleProcessCorruptedStateExceptions and SecurityCritical attributes to a method which may generate CSEs. They can then be captured as a regular managed exception in a try...catch block. As of .NET Framework 4.0, the SecurityCritical attribute is no longer required.

using System;

using System.IO;

using System.Runtime.ExceptionServices;

using System.Runtime.InteropServices;

// ...

[DllImport("kernel32.dll", SetLastError = true, CharSet = CharSet.Auto)]

private extern static IntPtr LoadLibrary(string path);

[DllImport("kernel32.dll")]

private extern static bool FreeLibrary(IntPtr hModule);

// ...

[UnmanagedFunctionPointer(CallingConvention.Cdecl)]

private delegate IntPtr DecryptDelegate(byte[] encryptedData, int length);

// ...

[HandleProcessCorruptedStateExceptions]

public static void Main(string[] args) {

var hModule = LoadLibrary("UnityPlayer.dll");

var encryptedData = File.ReadAllBytes("global-metadata.dat");

var length = encryptedData.Length;

var offset = (int) (Convert.ToInt64(args[0], 16) - 0x180000000);

var rva = hModule + offset;

var Decrypt = (DecryptDelegate) Marshal.GetDelegateForFunctionPointer(rva, typeof(DecryptDelegate));

Console.WriteLine($"Trying {offset:x8}");

try {

var decrypted = Decrypt(encryptedData, length);

byte[] decryptedData = new byte[length];

Marshal.Copy(decrypted, decryptedData, 0, length);

Console.WriteLine("Saving...");

File.WriteAllBytes($"possibly-decrypted-{offset:x8}.dat", decryptedData);

}

catch (Exception ex) {

Console.WriteLine("Exception thrown - " + ex.GetType().Name + " - " + ex.Message);

}

finally {

FreeLibrary(hModule);

}

}

CSE handling is not implemented in .NET Core (any version) or .NET 5, and the attribute type is defined but has no effect. Therefore we cannot use .NET Core for this task, so I have compiled the code below against .NET Framework 4.8.

We don’t strictly need to handle CSEs and can just let the process crash, but we may want to produce logging output to help in our analysis later. By handling them, we have the opportunity to write to a log file before terminating the process.

Here then is our first stab at the code:

Lines 7-11 import LoadLibrary and FreeLibrary from the Win32 API to enable us to load an unmanaged DLL at runtime.

Lines 14-15 define the calling convention and signature of the function we wish to find.

Line 20 loads the target image (UnityPlayer.dll in this case) and lines 21-22 prepare the function arguments. In this case we load the encrypted data file and retrieve its length.

We will supply the virtual address of the function to call as the command-line argument. Line 24 interprets the hex string supplied on the command-line as an integer, and subtracts the image’s preferred base address (which you can find from the image file’s PE header), leaving us with the file offset of the address to call. Line 25 then adds this offset to the actual image base address after it is loaded in memory to determine the memory location to call into.

Line 27 creates a managed delegate that allows us to call the desired function at the memory address we just calculated, with the correct argument and return types.

Line 32 attempts to actually invoke the function in the image, and more often than not will throw an exception.

Lines 34-35 retrieve the result of the function call (a byte array in this case) and line 38 saves it to a file so that we can inspect it, suffixing the filename with the address offset used so that if we are successful we’ll actually know the correct function address.

Line 41 handles any thrown exceptions including CSEs and line 44 unloads the DLL from memory, in a finally block so that it executes whether or not an exception was thrown.

Once we’ve compiled the project, we can invoke it on the command line as follows:

./FunctionSearch.exe 1800123456

Output:

Trying 00123456

Nothing else happens in this case, indicating some other kind of unhandled unmanaged exception that just causes the process to terminate. This is fine and will be the case much of the time.

Host in the shell

We can now construct a very simple PowerShell script to call this process in a loop with every possible address, as follows:

for ($a = 0x180000000; $a -le 0x1FFFFFFFF; $a++)

{

$aHex = $a.ToString('x')

Start-Process -FilePath ./FunctionSearch.exe -ArgumentList "$aHex" -NoNewWindow

}

The first line of the loop converts the loop value into a hexadecimal string. The second line creates a new process for our C# code, passing in the address argument. The -NoNewWindow switch redirects output to the existing PowerShell console rather than opening a new window for each new process.

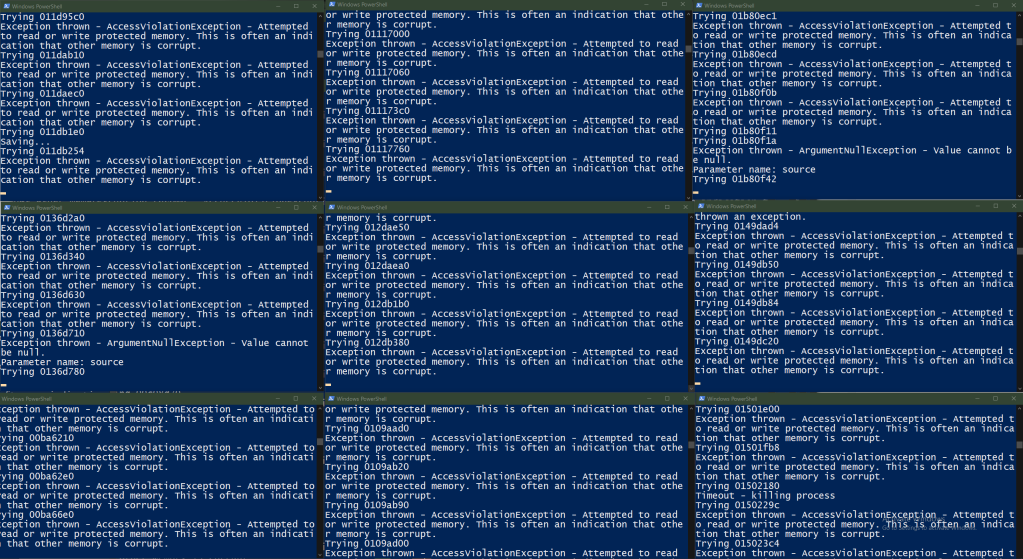

This script does not wait for the process to end before starting another one, so it will essentially queue them up as fast as your hardware allows. The output looks like this:

Trying 00000000 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Trying 00000001 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Trying 00000002 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Trying 00000003 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Trying 00000004 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt.

The client process crashes most of the time, but the host script continues to start new processes with new address arguments without any problems.

The Need For Speed

The image file I’m attacking here is 32.3MB – 0x205B870 possible addresses. Execution time per call will vary, but on my 24-core machine – and with the files stored on an SSD – this script iterates through about 0x200 (512) functions per minute and adds 7% to the CPU consumption. That means it will take 46 days to test every address – just a whisker shy of how long it takes to count votes in Pennsylvania. Statistics dictate that on average you’ll have to test half of the addresses in any given image to find the correct one, so we’re looking at 23 days on average per similar DLL we have to iterate through. It’s fair to say we are travelling at less than 50mph.

We can improve the search efficiency by pruning the search space. We know that the majority of addresses are mid-function or mid-instruction, and a good portion will not even be in the code section(s) of the image. If IDA or your disassembler of choice is able to find the start of every function (assuming the assembly-level obfuscation is not to a level that completely destroys its ability to sweep through the code – if it is you’re out of luck and will have to skip to the next section), we can dramatically reduce the number of addresses we have to test.

The plan is to build a text file containing a list of potential addresses to scan, and modify our script to read lines from this file instead of using a numeric for loop.

Here is how to do this with IDA, but you can achieve similar results with other disassemblers:

- Load the image into IDA

- Wait for a really long time. Hopefully not longer than 46 days, but you never know with IDA. IDA will populate the Functions window (Shift+F3) with most of the functions it finds within a few minutes, but for an absolutely complete list, you may need to wait several hours

- Right-click in the Functions window and choose Copy all, or just press Ctrl+Shift+Ins (because IDA loves keyboard shortcuts that make sense)

- Create a new document in a text editor that supports regular expression replacement and paste in the function list (Visual Studio Code: Ctrl+N, Ctrl+V). You will now have text that looks like this:

Function name Segment Start Length Locals Arguments R F L S B T = sub_180001000 .text 0000000180001000 00000003 00000000 00000000 R . . . . . . sub_180001020 .text 0000000180001020 00000004 00000000 00000000 R . . . . . . sub_180001030 .text 0000000180001030 000000BB 00000018 00000038 R . . . B . . sub_1800010EB .text 00000001800010EB 000000A9 00000018 00000040 R . . . B . . sub_1800012CC .text 00000001800012CC 00000138 00000018 0000002C R . . . B . . sub_180001404 .text 0000000180001404 000000D9 00000018 0000002C R . . . B . .

- Delete the first line

- Replace all using the regex search term

^(.?)\t(.?)\t([0-9A-Fa-f]{16}).*$and the replacement term$3. This regex isolates the address as the 3rd match group and discards everything else on the line (Visual Studio Code: Ctrl+H, Alt+R, enter regex, Tab, enter replacement, Ctrl+Alt+Enter). The file will now look like this:

0000000180001000 0000000180001020 0000000180001030 00000001800010EB 00000001800012CC 0000000180001404

- Save it as

function-list.txtin the same folder as the script

We will now modify the script we created above to read in each line of the file sequentially and pass it as an argument to the client process:

$functionListFile = "function-list.txt"

foreach ($line in Get-Content $functionListFile)

{

Start-Process -FilePath ./FunctionSearch.exe -ArgumentList "$line" -NoNewWindow

}

The output now looks like this:

Trying 00001030 Trying 00001020 Trying 000010eb Trying 00001000 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Trying 0000167e Trying 000012cc Trying 00001404 Trying 000014e0 Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt. Exception thrown - AccessViolationException - Attempted to read or write protected memory. This is often an indication that other memory is corrupt.

(note that output and error messages are displayed out of order since multiple client processes can be running simultaneously and at different stages of execution, and all are sharing the same console)

For this example, this narrows the search space from almost 34 million addresses to a scant 77,033 – a handy reduction by a factor of 50. Execution time goes up, however, as more actually valid code is executed. This time my machine chewed through 350 per minute for an estimated total runtime of 220 minutes, or about 3.6 hours – not great, not terrible.

Stop! In The Name of Mov

There is a problem with our script as it stands now: sometimes the client process will hang as the function call results in an infinite loop or fails to acquire a spinlock or mutex. This will eventually fill up the available memory with hung processes, and possibly bring down the entire machine. We can mitigate this by allowing the process a certain amount of time to execute, then killing it if the timeout expires. The following updated script accomplishes this:

$functionListFile = "function-list.txt"

foreach ($line in Get-Content $functionListFile)

{

$proc = Start-Process -FilePath ./FunctionSearch.exe -ArgumentList "$line" -PassThru -NoNewWindow

$timeout = $null

$proc | Wait-Process -Timeout 10 -ErrorAction SilentlyContinue -ErrorVariable timeout

if ($timeout) {

echo "Timeout - killing process"

$proc | kill

}

}

There are a few points of interest here. Normally, Start-Process does not return anything. By adding the -PassThru switch, a Process object will be returned, which we store in $proc and can use to query and control the process after it starts (line 5).

Line 8 pipes the stored Process object to Wait-Process, which waits for a process to exit. By specifying the -Timeout option, we instruct the cmdlet to wait for a maximum number of seconds, after which the script will continue executing regardless of whether the client process has exited or not.

If a timeout has occurred, the variable whose name we pass to Wait-Process in the -ErrorVariable action will be non-null. We check for this on line 10, and kill the process on line 12 in the event of a timeout. This is not a very clean or elegant way of ending a process, but it works for our purposes.

This solution guarantees that the script won’t get stuck or exhaust the available hardware resources, but it also has the side effect of only allowing one client process to run at a time, since we wait to see whether it times out or not before starting the next one. This is actually good for accurate logging to understand what’s happening on each call, but bad for search efficiency. Now we’re only hitting 44 calls per minute, for a total maximum runtime of 29 hours. Ouch.

50mph is not going to be enough. We need to get all the way to 88mph.

Where we’re going, we don’t need code

Even on a low-end PC, we won’t be coming close to saturating the available compute resources with our current solution. Unless the storage media is a bottleneck, we can improve performance by splitting the work up into threads to increase CPU utilization. Tasks like this where each test can run independently are an excellent candidate for parallelization, as there are no synchronization considerations to worry about.

In this demo, we’re actually going to spin up a large number of PowerShell instances, so we will be splitting the work across processes rather than threads. Creating a process is – relatively speaking – computationally expensive, but we will only perform this spin-up at the beginning of the search so it’s not a big deal in this case. The plan is to run multiple instances of the script we’ve already created in parallel, in separate processes, and give each instance a different set of addresses to scan.

First, we need to split the function address list up into chunks, one chunk for each host process. If you skipped the previous section because your target code is obfuscated in a way that prevents you from deriving the function addresses, you can instead split up the entire address range into chunks instead.

Tip: If you don’t have a list of function address candidates and need to scan every address, just use the range in the .text section and any other code/executable sections. The .data and related sections are unlikely to contain code. In the event you don’t find anything after scanning the code address ranges, you can try to search the data address ranges as a last resort.

We can sit in our text editor and copy paste all day, or we can write another PowerShell script to do it for us. This is especially useful if we decide to change the chunk size (to have more or less processes running at once) or if we need to regenerate the address range from scratch and split it up again.

Here is the script:

param ([string] $fileString,[int] $LineNumberToSplitOn) $file = [System.IO.FileInfo] (Resolve-Path $fileString).Path$DirectoryContainingFiles = $file.Directory$FileContent = Get-Content $(Get-Item $file.FullName)$LineCount = $FileContent.Count$TotalNumberOfSplitFiles = [math]::ceiling($($LineCount / $LineNumberToSplitOn)) if ($TotalNumberOfSplitFiles -gt 1) {for ($i=1; $i -lt $($TotalNumberOfSplitFiles+1); $i++) {$StartingLine = $LineNumberToSplitOn * $($i-1)if ($LineCount -lt $($LineNumberToSplitOn * $i)) {$EndingLine = $LineCount}if ($LineCount -gt $($LineNumberToSplitOn * $i)) {$EndingLine = $LineNumberToSplitOn * $i} New-Variable -Name "$($file.BaseName)_Part$i" -Value $($FileContent[$StartingLine..$EndingLine]) -Force $(Get-Variable -Name "$($file.BaseName)_Part$i" -ValueOnly) | Out-File "$DirectoryContainingFiles\$($file.BaseName)_Part$i$($file.Extension)"}} |

The details are rather boring, but it essentially takes two arguments: the text file and the line number multiple to split on, calculates the number of files needed, then writes them all with “_PartX” appended.

Usage:

./split-text.ps1 function-list.txt 1500 |

This will split the list into chunks of 1500 addresses:

Mode LastWriteTime Length Name---- ------------- ------ -----a---- 24/01/2021 05:43 54038 function-list_Part1.txt-a---- 24/01/2021 05:43 54038 function-list_Part10.txt-a---- 24/01/2021 05:43 54038 function-list_Part11.txt-a---- 24/01/2021 05:43 54038 function-list_Part12.txt-a---- 24/01/2021 05:43 54038 function-list_Part13.txt-a---- 24/01/2021 05:43 54038 function-list_Part14.txt... |

We replace the hard-coded $functionListFile in our previous script with a command-line argument so that we can specify a different address range file for each instance:

param ([string] $functionListFile) foreach ($line in Get-Content $functionListFile){$proc = Start-Process -FilePath ./FunctionSearch.exe -ArgumentList "$line" -PassThru -NoNewWindow $timeout = $null$proc | Wait-Process -Timeout 10 -ErrorAction SilentlyContinue -ErrorVariable timeout if ($timeout) {echo "Timeout - killing process"$proc | kill}} |

Finally, we need a startup script to launch an instance of the search script for each of the search ranges (text files):

for ($i = 1; $i -le 52; $i++) {Start-Process -FilePath "powershell.exe" -ArgumentList "-Command ./search-script.ps1 function-list_Part$i.txt"} |

(in this example, splitting the list up into 1500-item chunks generated 52 files – change as appropriate for your application)

This script starts one instance for each address range file without waiting for any of them to finish, so they all run simultaneously. This is going to spew a massive amount of PowerShell windows onto your screen. Don’t be afraid!

You should also see close to 100% CPU utilization. If you don’t, split the function list into smaller chunks and start more instances. If your PC falls over into a crumpled heap, split the function list into larger chunks and start less instances! One instance per CPU hardware thread is a good starting baseline.

The work will not be distributed 100% evenly because some functions will take longer to execute than others, and there will also be timeouts. Rather elegantly, each PowerShell window will close once its search range completes, so you can just keep an eye on this to track the progress.

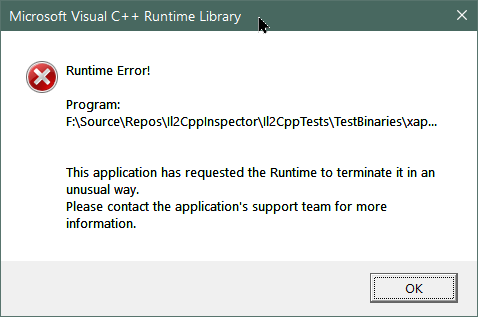

You will likely see various error dialogs as the scan runs:

Don’t worry about these. There is no need to close them: our script will kill the process after 10 seconds anyway.

Watching the scan can be highly informative about other functions in the application. Here is a sample of messages that came up during the run for our example DLL:

Trying 00c20d90open_socket: can't get ©4ùd▓ host entryvrpn_connect_udp_port: error finding host by name (¥.é┬ëïR╠└ß=═þ─Æjô┌ÀdkÅ┼mÆX(\ :ö╣D╣ë4).vrpn_udp_request_lob_packet: send() failed: No error |

Trying 00c96d40SetPauseAnimatorBelowLodLevel receive a level 38512456 which is more than 255 (Filename: Line: 770) |

On my machine, all of the PowerShell instances ended within a few minutes of each other, and the total runtime at which the last instance completed was 93 minutes – about a 19x improvement over the single-host process version, which is about what you’d expect from a 24-core CPU.

Note: This can be improved quite a bit further. You can create a pool of client processes per host process without waiting for them to finish, then each time a process in the pool completes or times out, you create a new client process with the next address to test. This will allow the host script to cycle through other client processes while one of them is hung.

You make the startup script count the number of files matching a filter like function-list-Part*.txt so that the loop counter upper bound is not hard-coded.

You can alter the text file split script to specify how many chunks you want instead of what line to split on.

You can also dispense with splitting the file altogether and simply alter the host script to take the starting line number and a count as input, and change the startup script to use a numeric for loop with the count per host script as the stepping factor. This is actually a subjectively better solution, but I didn’t have time to rework the script in time for this article unfortunately.

In League with The /dev/null

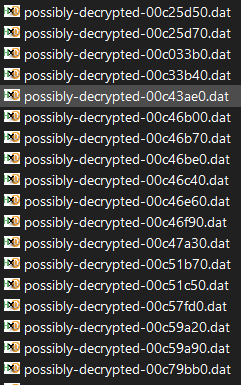

We’ve set up our scanner nicely, but so far we haven’t actually done anything with the results. If the function you’re looking for returns an int or some other simple type, we’ve just discarded it. If you are outputting another file, you probably ran out of disk space:

The demo scan above generated 2,292 files @ 32.3MB each for a cool 73GB consumed disk space. Yikes.

Let’s add some checks and filters to the client process so that it can test if the return value contains or at least resembles the expected results. The code to implement this is completely dependent on the target function at hand, but I will demonstrate my approach for this encrypted file with some general rules of thumb.

If you are dealing with a pointer to a returned block of memory as in this case, there are some things to bear in mind:

- some functions will initialize a block of memory with all zeroes. Of the files generated by our scan, quite a lot of them were just all-zero, or had large all-zero areas

- some functions will copy the input, ignore the input arguments (because the real function being called doesn’t take any arguments) or otherwise have no noticeable effect, and the output will be the same as the input, or garbage

- some functions will initialize a fixed amount of memory, meaning the start of the return data will be zeroes or meaningful data, and the rest will be garbage

The upshot of all this is that if we’re working with a large data set – as we are here with a 32MB byte array – you will want to try and avoid writing filters that examine the beginning of the data where possible. This is the area that is most likely to trigger the most false positives when you try to write code that analyzes it for validity automatically.

That being said, you also need to be very careful not to be too aggressive with your filtering, otherwise you may accidentally filter out the correct function – and indeed this happened to me as I was producing this example, since an area I assumed would be zeroes in the decrypted data actually wasn’t. It is generally better to have to look through 100 results by hand than narrowing it down to 10, realizing none of them are correct and having to run the entire scan again with looser filter criteria.

For illustration, I’ll describe how I filtered the results for this exercise. I started by identifying a region of the file that should definitely change once it had been decrypted, by simply scrolling through it with a hex editor:

000C02D0 16 00 00 00 5B 27 02 00 10 00 00 00 6B 27 02 00000C02E0 11 00 00 00 7C 27 02 00 0E 00 00 00 8A 27 02 00000C02F0 12 00 00 00 9C 27 02 00 0E 00 00 00 AA 27 02 00000C0300 23 20 9F 95 0C C1 C0 64 CC C9 FD 26 AA 92 3E 05000C0310 F4 7B 7A 48 9B 54 23 97 55 57 2F D2 BF 65 7A 00000C0320 38 D9 7E 08 03 B9 7E D5 D8 79 6C ED DD EC DF BD000C0330 52 3F 6A E3 64 84 1A 9C B4 85 ED C0 1D 8A 62 BA000C0340 12 00 00 00 19 28 02 00 13 00 00 00 2C 28 02 00000C0350 15 00 00 00 41 28 02 00 15 00 00 00 56 28 02 00000C0360 16 00 00 00 6C 28 02 00 0E 00 00 00 7A 28 02 00 |

As mentioned earlier, the file has periodic blocks of 0x40 encrypted bytes. The region 0xC0300-0xC033F above seems to be encrypted, and should change if we can force it to be decrypted. We select this region as the first test area and store the original bytes for this region in an array:

var testArea = 0xC0300;var testLength = 0x40; var originalEncryptedBytes = encryptedData.Skip(testArea).Take(testLength).ToArray(); |

Right before the code which saves the file, we insert a test:

// Check known encrypted areaif (decryptedData.Skip(testArea).Take(testLength).SequenceEqual(originalEncryptedBytes)) {Console.WriteLine("No change to known encrypted area");return;} |

This simply compares the original data with the data copied from the result pointer and exits the process if they are the same (the return statement will cause the finally block to execute to free the DLL, then return control to the Powershell host script).

As we just discussed, it’s possible we got back a big ol’ empty block of zeroes, so we also filter out these results:

if (decryptedData.Skip(testArea).Take(testLength).Max() == 0) {Console.WriteLine("Encrypted area is now all zeroes");return;} |

If the maximum byte value of the region is zero, then all the bytes are zero (you can also use .All(x => x == 0) if you prefer).

This deals with the no change and all-zero scenarios, but it’s all possible we get junk back. Here we do the opposite: take a region near the start of the file which should not be changed (in this case the first 0x40 bytes are encrypted and the next 0x40 bytes are unencrypted), and test it to make sure that it has not changed:

var originalUnencryptedBytes = encryptedData.Skip(testLength).Take(testLength).ToArray();// ... if (!decryptedData.Skip(testLength).Take(testLength).SequenceEqual(originalUnencryptedBytes)) {Console.WriteLine("Area changed that should be left the same");return;} |

When we make these changes and re-run the scan using just the functions that generated file output last time, the overall output resembles this snippet:

...Trying 0016ed40No change to known encrypted areaTrying 0016ed90No change to known encrypted areaTrying 0016f470Area changed that should be left the sameTrying 0016f4c0No change to known encrypted areaTrying 0016f5c0Encrypted area is now all zeroesTrying 0016f6a0Encrypted area is now all zeroesTrying 0016f810No change to known encrypted areaTrying 001a52e0No change to known encrypted areaTrying 001a7010Saving...Trying 001bce80No change to known encrypted areaTrying 001c1860No change to known encrypted areaTrying 001d6550... |

Tip: If you want to tighten the filter then re-run the scan using only the subset of candidate addresses which already produced file outputs in the last run, you can generate a new address range list based on the generated files like this:ls possibly-decrypted-*.dat | % { echo ([int] ("0x" + $_.Name.Substring(19,8)) +

0x180000000).ToString('X16') } > function-list-new.txt

This enumerates all of the generated files, extracts only the offset portion of each filename, converts the hex strings to integers, adds the image preferred base address to each, converts them back into strings and outputs the new set of addresses as a list to the specified file.

How many output files do we get now?

possibly-decrypted-001a7010.dat |

One.

Let’s take a look inside at the same region as before:

000C02D0 16 00 00 00 5B 27 02 00 10 00 00 00 6B 27 02 00000C02E0 11 00 00 00 7C 27 02 00 0E 00 00 00 8A 27 02 00000C02F0 12 00 00 00 9C 27 02 00 0E 00 00 00 AA 27 02 00000C0300 A5 2F 42 30 D5 23 B2 9C 94 2A C0 BA B5 98 A7 68000C0310 0C 00 00 00 C7 27 02 00 0D 00 00 00 D4 27 02 00000C0320 10 00 00 00 E4 27 02 00 0D 00 00 00 F1 27 02 00000C0330 09 00 00 00 FA 27 02 00 0D 00 00 00 07 28 02 00000C0340 12 00 00 00 19 28 02 00 13 00 00 00 2C 28 02 00000C0350 15 00 00 00 41 28 02 00 15 00 00 00 56 28 02 00000C0360 16 00 00 00 6C 28 02 00 0E 00 00 00 7A 28 02 00 |

Smokin’! We decrypted the data – or at least most of it. The 16 bytes at 0xC0300 are still messed up, but that is a complication unique to Genshin Impact; the main point is we have proven that the correct function has been found – and that is how you brute-force a function address with PowerShell. Nicely done!

We came, we predicted, we scripted and decrypted

(you can skip this section if you don’t care about Genshin Impact; the details are not relevant to the main exercise in the article)

In the case of our particular case study, there are still 16 bytes of not fully-decrypted data per block. By looking through the data by eye, we can easily see that this can be resolved by a 16-byte XOR operation per block. All that is required is to derive the XOR term by manual inspection. Here are a few blocks (in Genshin Impact 1.1 they occur every 0x26700 bytes on the PC version and every 0x24B80 bytes on the Android version):

12 00 00 00 9C 27 02 00 0E 00 00 00 AA 27 02 00A5 2F 42 30 D5 23 B2 9C 94 2A C0 BA B5 98 A7 680C 00 00 00 C7 27 02 00 0D 00 00 00 D4 27 02 00...03 00 00 00 B2 65 09 00 12 00 00 00 C4 65 09 00BB 2F 42 30 BD 61 B9 9C 81 2A C0 BA F8 DA AC 6806 00 00 00 FC 65 09 00 1A 00 00 00 16 66 09 00...00 00 00 00 FF FF FF FF 02 00 91 00 FF FF 00 00C3 8D 00 06 B9 00 00 00 6B 33 00 00 96 1C 00 0002 23 1B 00 55 C7 01 00 FF FF FF FF CB F4 00 00FF FF FF FF 22 7E 00 00 D0 33 01 00 1F 00 00 00AD 2F 42 30 98 FB 4F 63 9C 2A 56 BA F1 40 A5 68C4 8D 00 06 B9 00 00 00 6B 33 00 00 F8 4F 00 0002 23 1B 00 56 C7 01 00 FF FF FF FF CC F4 00 00FF FF FF FF 7D 56 00 00 D1 33 01 00 60 00 00 0000 00 00 00 FF FF FF FF 02 00 96 00 FF FF 00 00...03 00 00 00 4A F2 00 00 03 00 00 00 4D F2 00 0003 00 00 00 50 F2 00 00 03 00 00 00 53 F2 00 00AE 2F 42 30 31 F6 B0 9C 9E 2A C0 BA 57 4D A5 6803 00 00 00 5C F2 00 00 03 00 00 00 5F F2 00 0003 00 00 00 62 F2 00 00 03 00 00 00 65 F2 00 00 |

I chose these more or less at random. The XOR term can be derived based on the surrounding context. If there is an obvious pattern in the previous or following group of bytes, you can XOR an encrypted byte with the corresponding unencrypted byte to derive part of the term as follows:

- Block 1:

?? 2F 42 30 ?? (23^27) (B2^02) 9C ?? 2A C0 BA ?? (98^27) (A7^02) 68 - Block 2: no further information

- Block 3: no further information

- Block 4:

(AE^03) .. .. .. ((3^5) << 4 + ?) .. .. .. (9E^03) .. .. .. ((5^5) << 4 + ?) .. .. ..

XOR term so far:

AD 2F 42 30 6? 04 80 9C 9D 2A C0 BA 0? BF A5 68 |

To find the remaining two nibbles we can save a bit of time by searching the file for parts of the term we have already found to try to find an obvious solution. We try AD 2F 42:

00 00 00 01 00 00 00 02 00 00 00 01 00 00 00 0200 00 00 03 00 00 00 00 00 00 00 00 00 00 00 00AD 2F 42 30 67 04 B0 9C 9D 2A C0 BA 0E BF A5 6800 00 00 00 00 00 00 00 00 00 00 00 00 00 00 0000 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |

The non-zero numbers only occur every 4th byte so we can safely conclude the missing bytes are 67 and 0E for a final term of:

AD 2F 42 30 67 04 80 9C 9D 2A C0 BA 0E BF A5 68 |

And that’s it! By calling the decryption function, then XORing the first 16 bytes of each encrypted block with this value, we can now fully decrypt the metadata file.

Might as well JMP

The amount of code we reverse engineered in this exercise was zero. We merely used prior knowledge from a previous, less obfuscated product and a visual survey of the input data. This completely defeats all of the extra security measures employed with relative ease.

Setting up the initial test harness is a bit cumbersome, but once in place it can now be re-used for any function in any application with near-zero effort. All you need to do is change the function delegate, input arguments and output filter in the C# client code and generate a new list of addresses to scan.

Since the function we found in this particular DLL is a hidden export, the developers are going to have a hard time preventing this kind of attack. While significantly hardening the application against both static and dynamic analysis via assembly-level obfuscation and anti-debug traps, they completely neglected to consider that none of it matters if you can trivially find the entry point. It’s like building a huge layer cake with tunnel vision, and not realizing someone can just stick their finger straight into the lowest layer and lick off the chocolate.

While the developers could change the function signature, this would be a temporary workaround at best. Perhaps least bad solution here is probably to move the decryption functions into the game binary so they are not imports, and inline them in another function so there is no obvious entry point. This technique can be further improved by adding an argument to the enclosing function as a behaviour (code path) selector – perhaps merging a large collection of other functions together – so that the intent becomes very unclear. Of course, this will significantly impact the readability and maintainability of the original source code, especially if the merged functions require different argument types.

Another option could be to split the logic of the single target function into multiple functions to increase the search complexity. One will have to be aware of and find multiple functions, and call them in the correct order. The actual search time would only increase linearly with the number of additional functions; however, deriving the correct arguments for each could increase the complexity exponentially if implemented correctly. If the attacker does not know the function arguments, then the overall search space is a factor of the image size, the number of functions to find and the possible range of inputs to each.

As obfuscation developers find ever-more intricate mechanisms to deploy, so too must analysts learn to think outside the box and pursue attack vectors the obfuscation authors may not have thought of. The attack I outlined today is certainly not new and I take absolutely no credit for it, however I haven’t seen much coverage of it on the internet with practical working examples so I hope you found it insightful! Obfuscation techniques will continue to evolve inexorably, as will the tooling available to reverse engineer them. This cat and mouse game has been in progress since long before the rise of the modern internet. The cats are always on the prowl – and whenever they try something new, this mouse will be here, waiting for them.